Can You Close the Performance Gap Between GPU and CPU for Deep Learning Models? - Deci

By a mysterious writer

Description

How can we optimize CPUs to improve the inference performance of deep learning models? This post discusses model efficiency and the performance gap between GPU and CPU inference. Read on.

Is there a way to train simultaneously on CPU and GPU? (e.g., two separate neural network models) - Quora

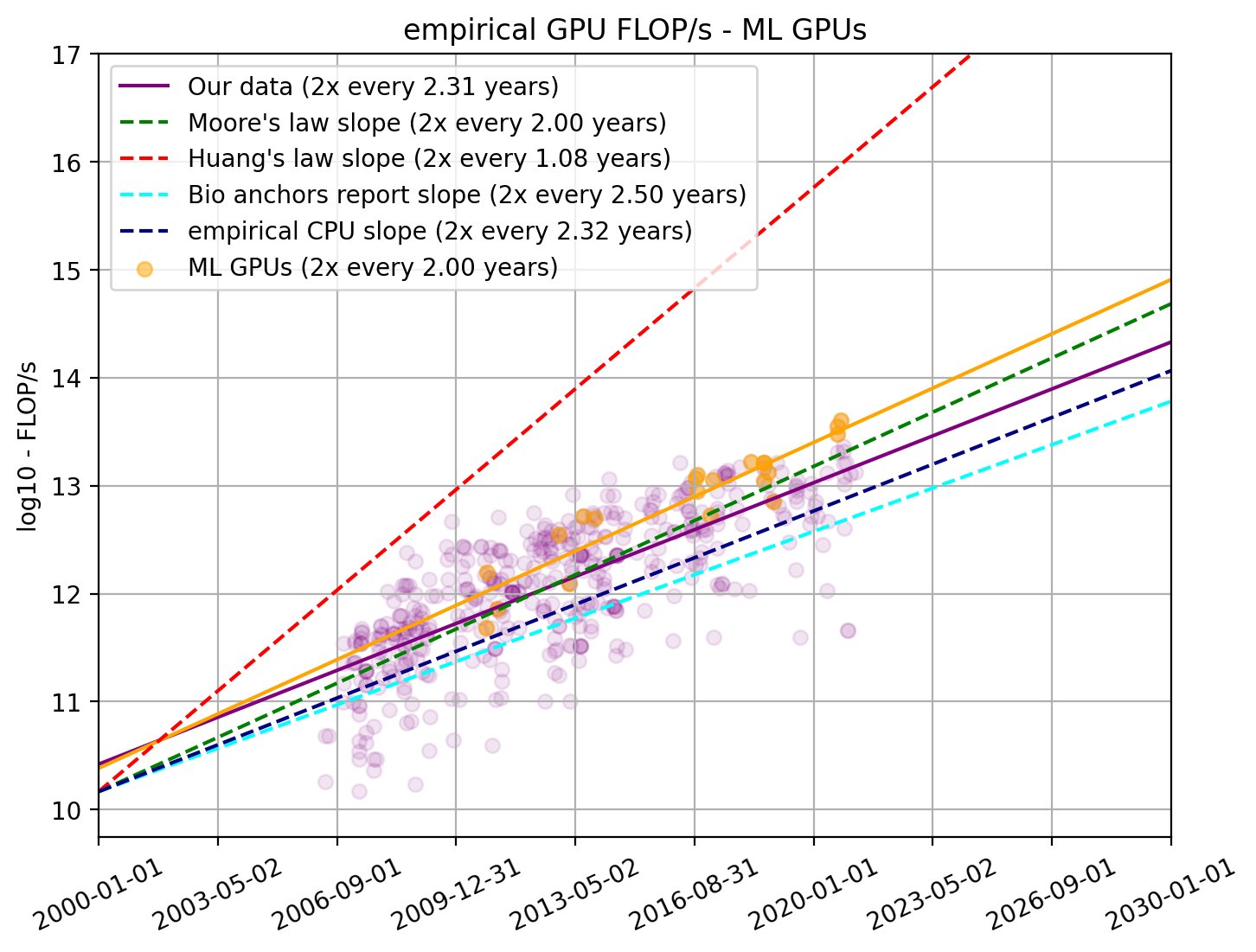

Are all FLOPs created equal? Originally posted on…, by Amnon Geifman

CPU-Based AI Breakthrough Could Ease Pressure on GPU Market

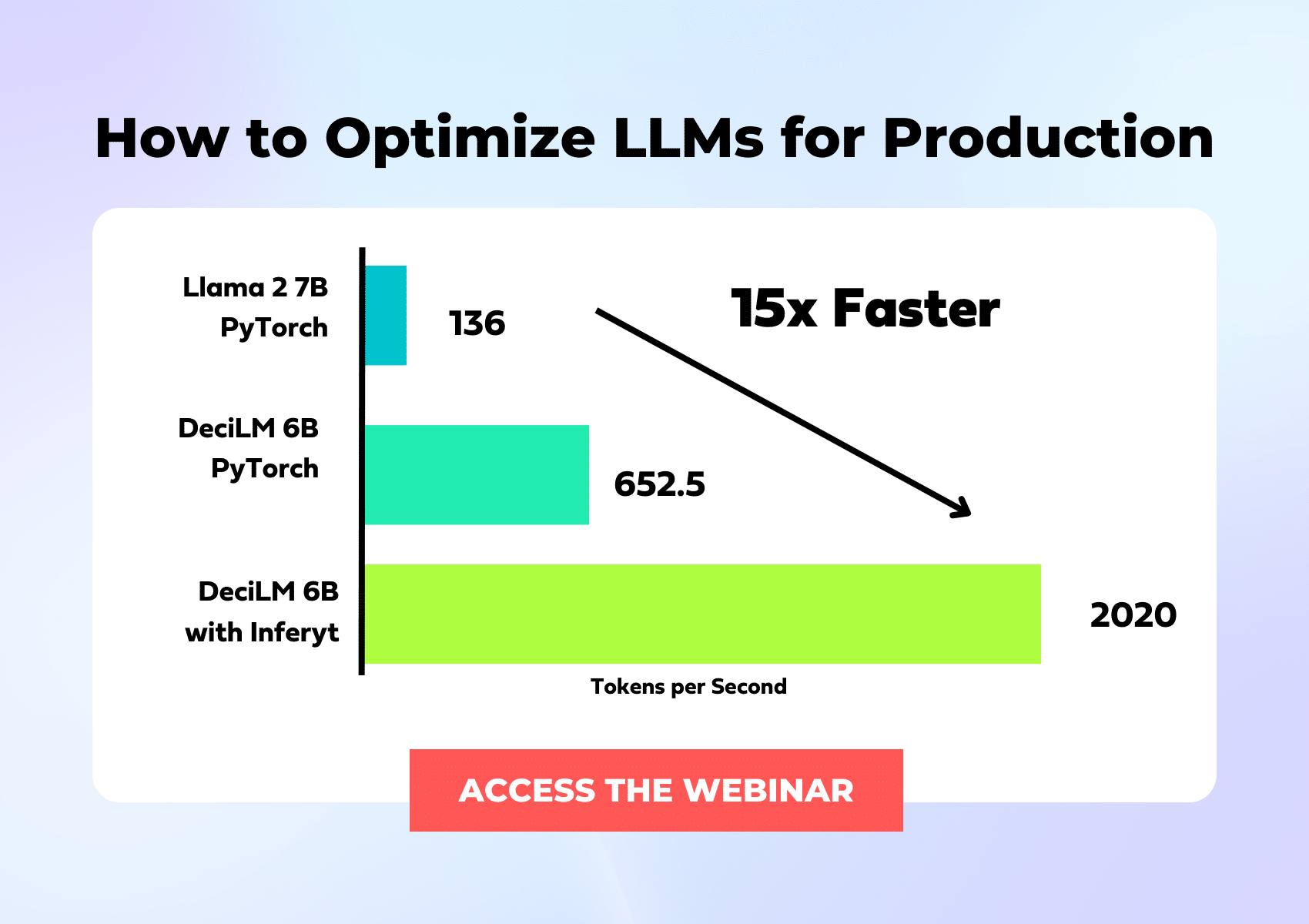

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

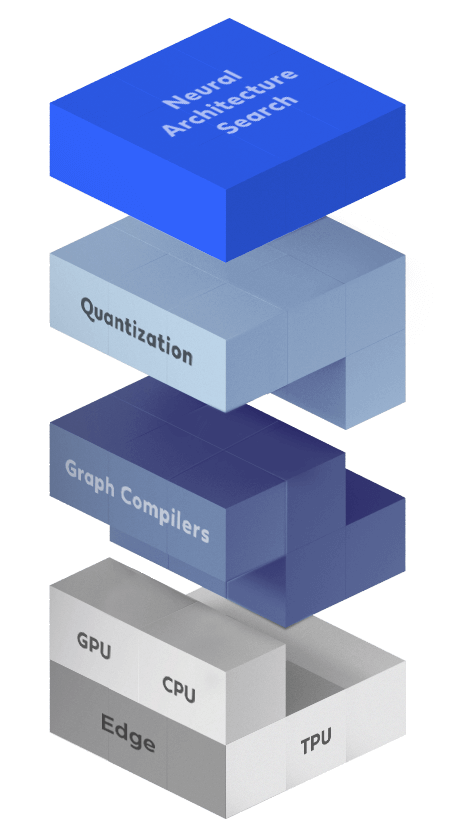

The Ultimate Deep Learning Inference Acceleration Guide

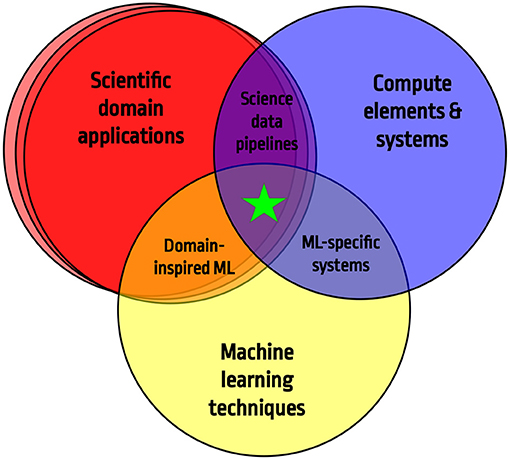

Frontiers | Applications and Techniques for Fast Machine Learning in Science

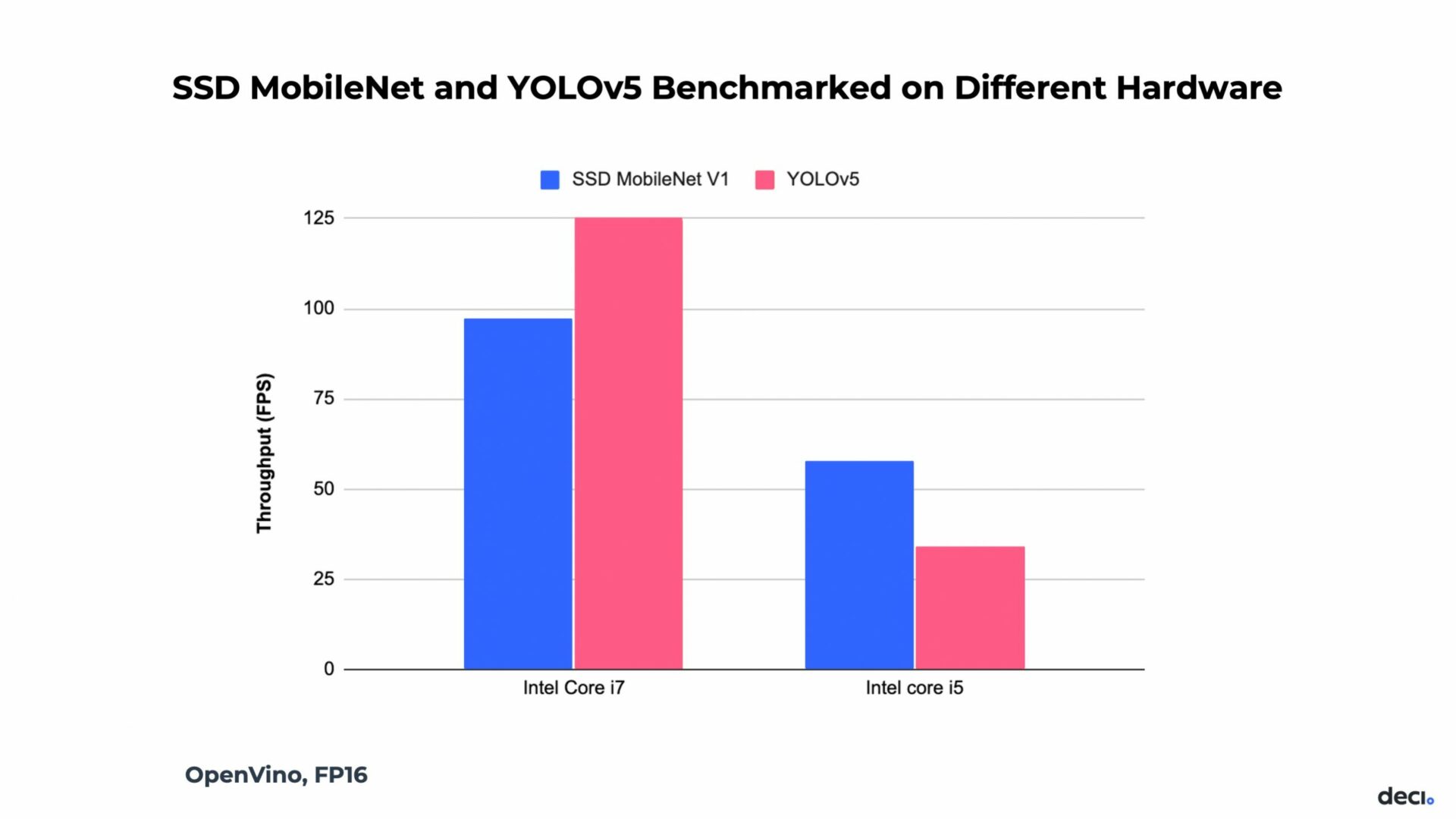

Webinar: Can You Close the GPU and CPU Performance Gap for CNNs?

Tinker-HP: Accelerating Molecular Dynamics Simulations of Large Complex Systems with Advanced Point Dipole Polarizable Force Fields Using GPUs and Multi-GPU Systems

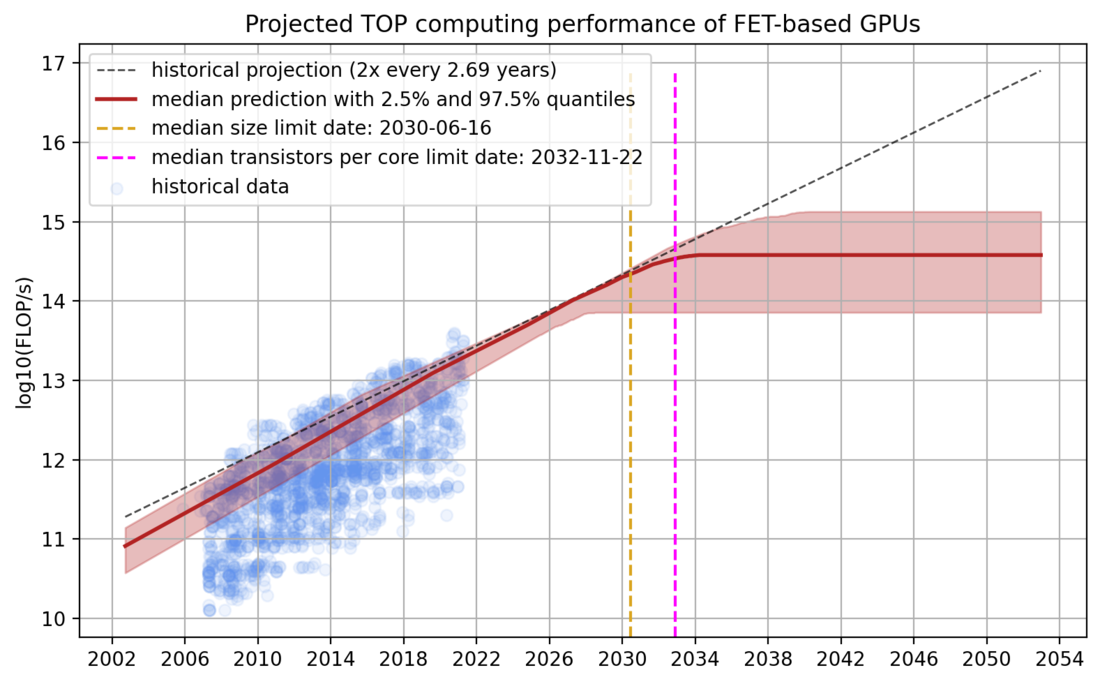

Predicting GPU Performance – Epoch

MFFT: A GPU Accelerated Highly Efficient Mixed-Precision Large-Scale FFT Framework

(PDF) Performance of CPUs/GPUs for Deep Learning Workloads

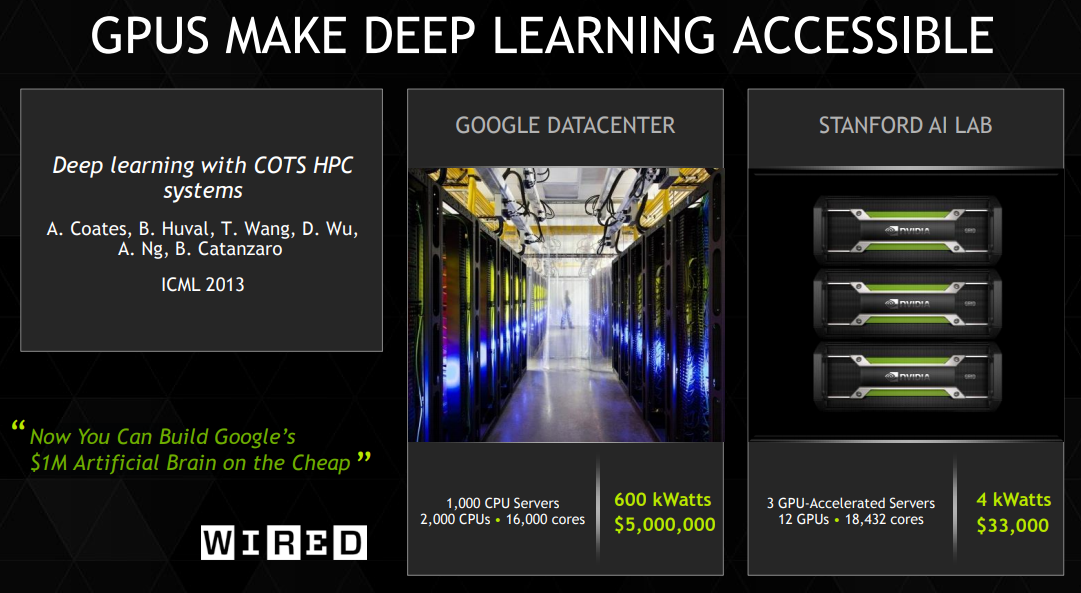

Hardware for Deep Learning. Part 3: GPU, by Grigory Sapunov

Intel CPUs Gaining Optimized Deep Learning Inferencing from Deci in New Collaboration
