(PDF) Incorporating representation learning and multihead attention

By a mysterious writer

Description

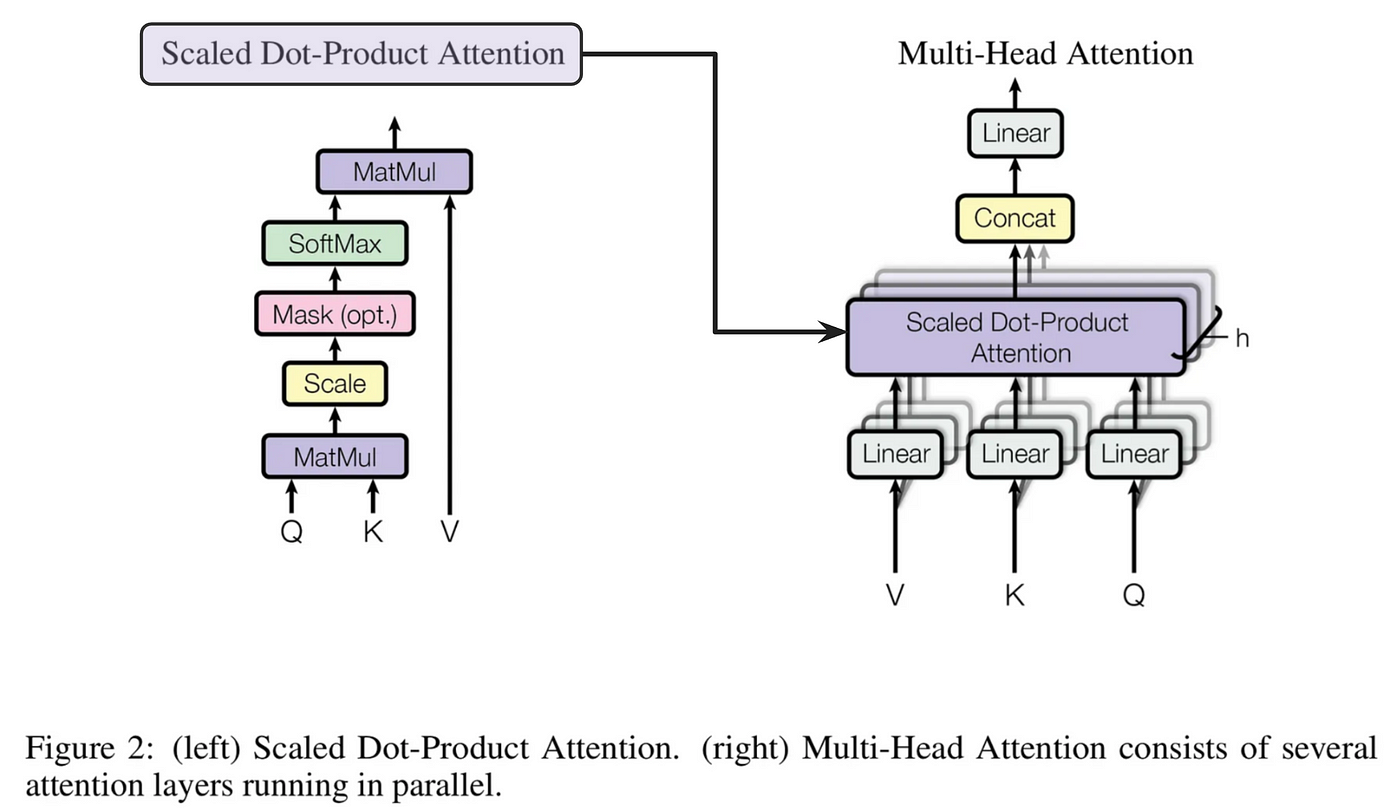

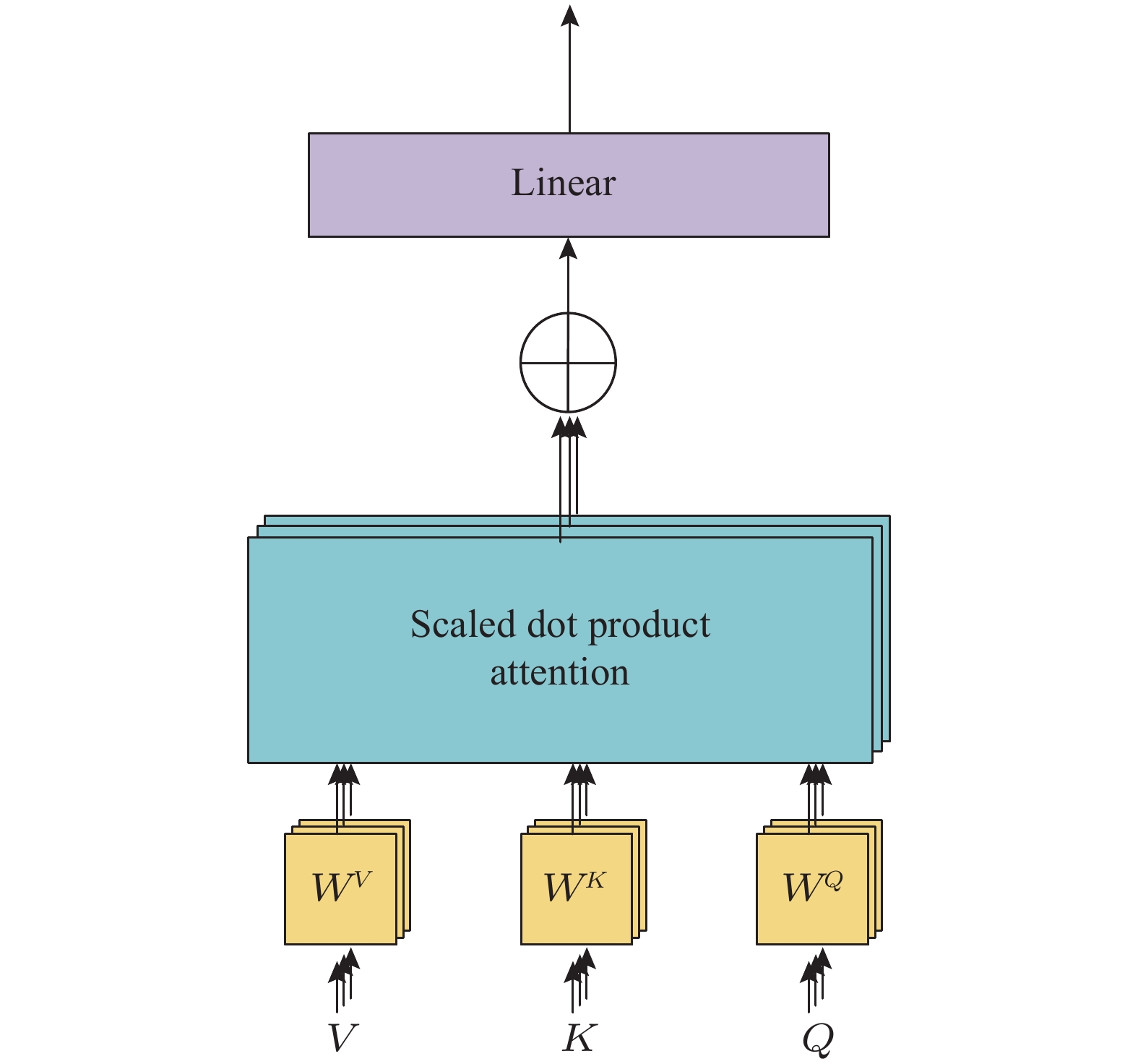

Intuition for Multi-headed Attention, by Ngieng Kianyew

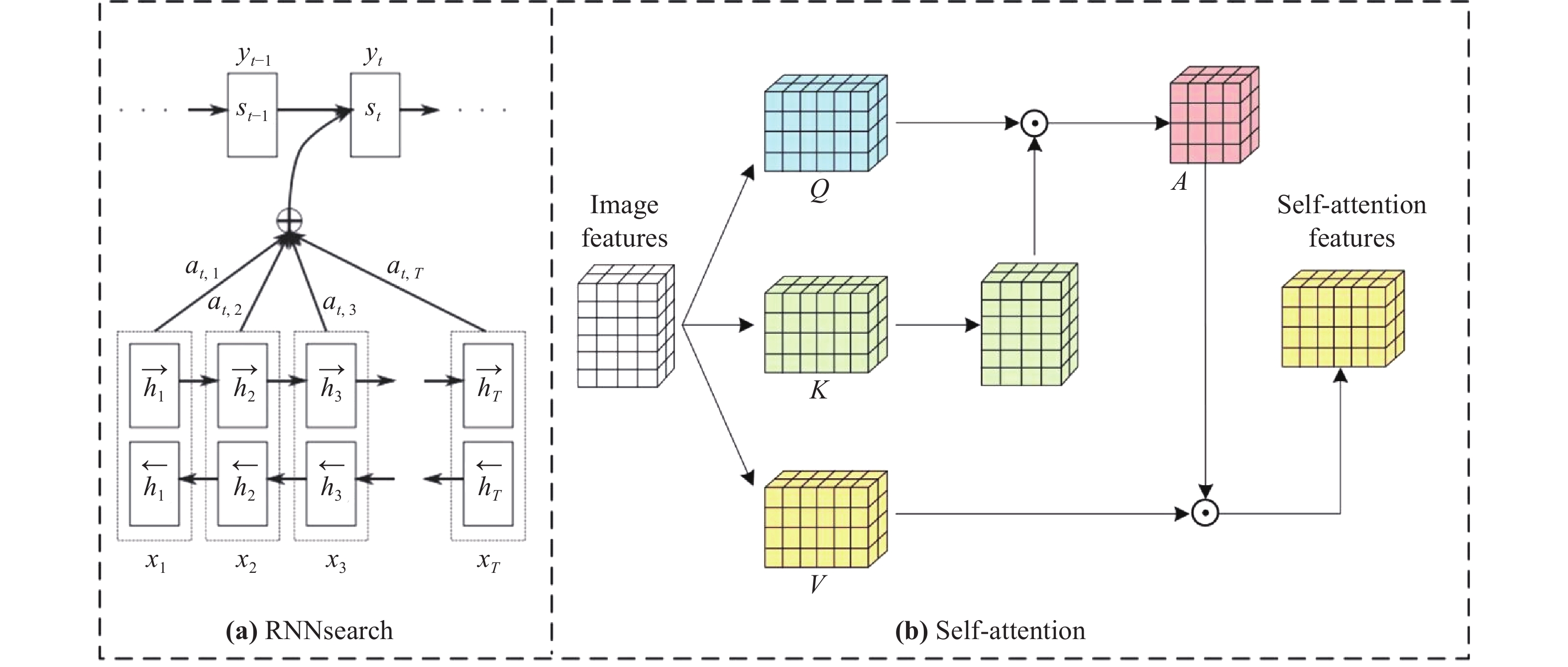

Deep Learning Attention Mechanism in Medical Image Analysis: Basics and Beyonds - Scilight

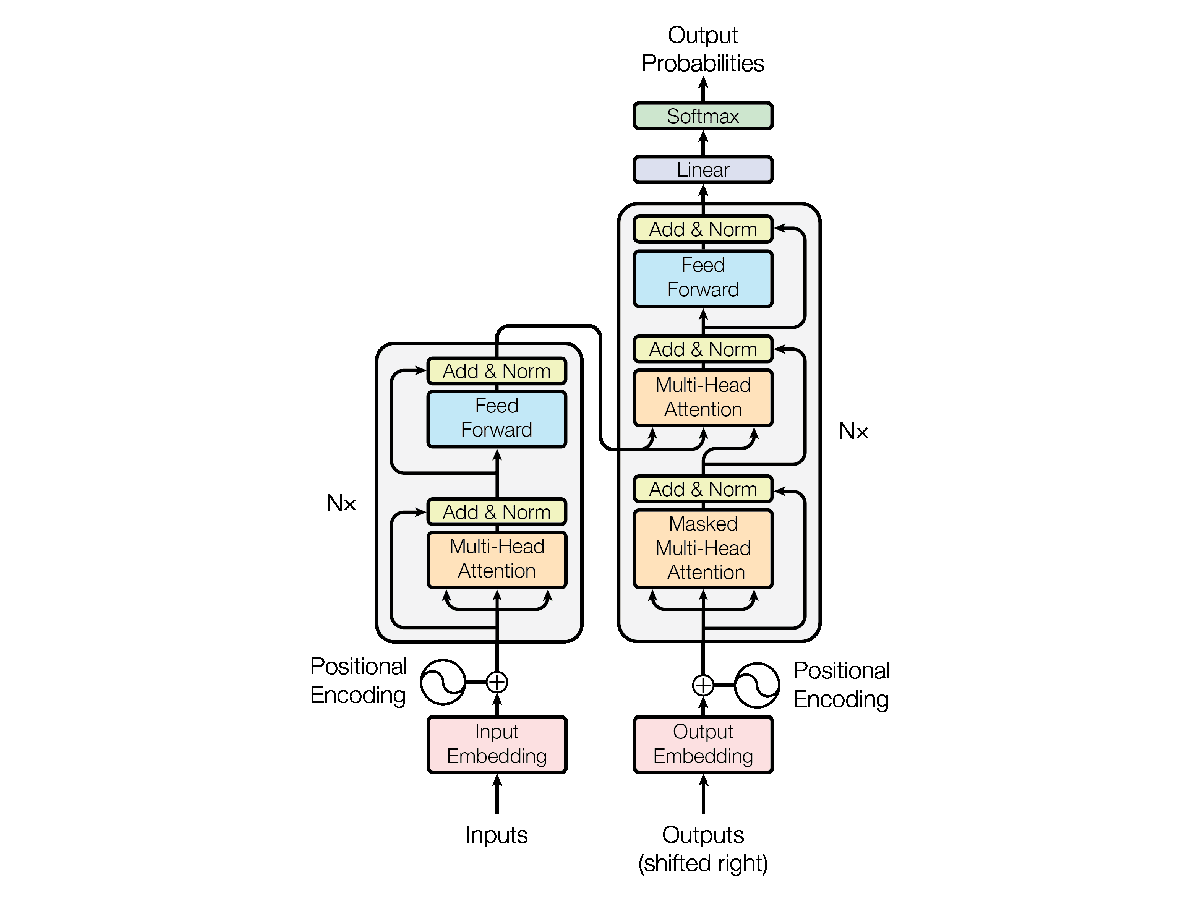

Understanding the Transformer Model: A Breakdown of “Attention is All You Need”, by Srikari Rallabandi, MLearning.ai

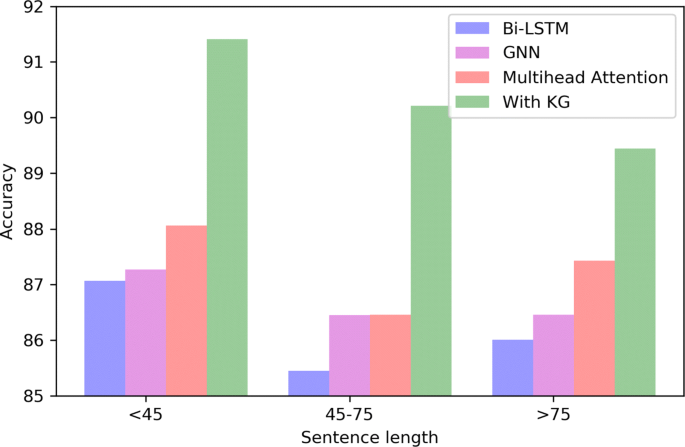

Incorporating representation learning and multihead attention to improve biomedical cross-sentence n-ary relation extraction, BMC Bioinformatics

AttentionSplice: An Interpretable Multi-Head Self-Attention Based Hybrid Deep Learning Model in Splice Site Prediction

RNN with Multi-Head Attention

Build a Transformer in JAX from scratch: how to write and train your own models

(PDF) Multi-Head Attention with Diversity for Learning Grounded Multilingual Multimodal Representations

Multi-head enhanced self-attention network for novelty detection - ScienceDirect
