8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Description

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations
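As a rough illustration of the tensor sharding topic listed above, here is a minimal sketch using the `jax.sharding` API. It assumes a reasonably recent JAX release, where the functionality of `pjit()` is available through plain `jax.jit` and `xmap()` is no longer the recommended route. The mesh axis name `"data"`, the array shape, and the function `scale_and_sum` are hypothetical choices made for this example, not taken from the chapter.

```python
import numpy as np
import jax
import jax.numpy as jnp
from jax.sharding import Mesh, NamedSharding, PartitionSpec as P

# Build a 1-D device mesh over whatever devices are available.
# On a single-CPU machine this is a mesh of one device, and the code still runs.
devices = np.array(jax.devices())
mesh = Mesh(devices, axis_names=("data",))  # "data" is an assumed axis name

# Create an array whose leading dimension divides evenly across the devices.
n = len(devices)
x = jnp.arange(n * 4 * 3, dtype=jnp.float32).reshape(n * 4, 3)

# Shard rows across the "data" axis; keep columns replicated (None).
sharding = NamedSharding(mesh, P("data", None))
x_sharded = jax.device_put(x, sharding)

@jax.jit
def scale_and_sum(a):
    # XLA partitions this computation according to the input sharding.
    return (a * 2.0).sum(axis=1)

y = scale_and_sum(x_sharded)
print(y.shape, x_sharded.sharding)
```

The function body is ordinary JAX code. Only the placement of the input changes, and the compiler decides how to split the work, which is the general idea behind automatic partitioning.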

7 Parallelizing your computations - Deep Learning with JAX

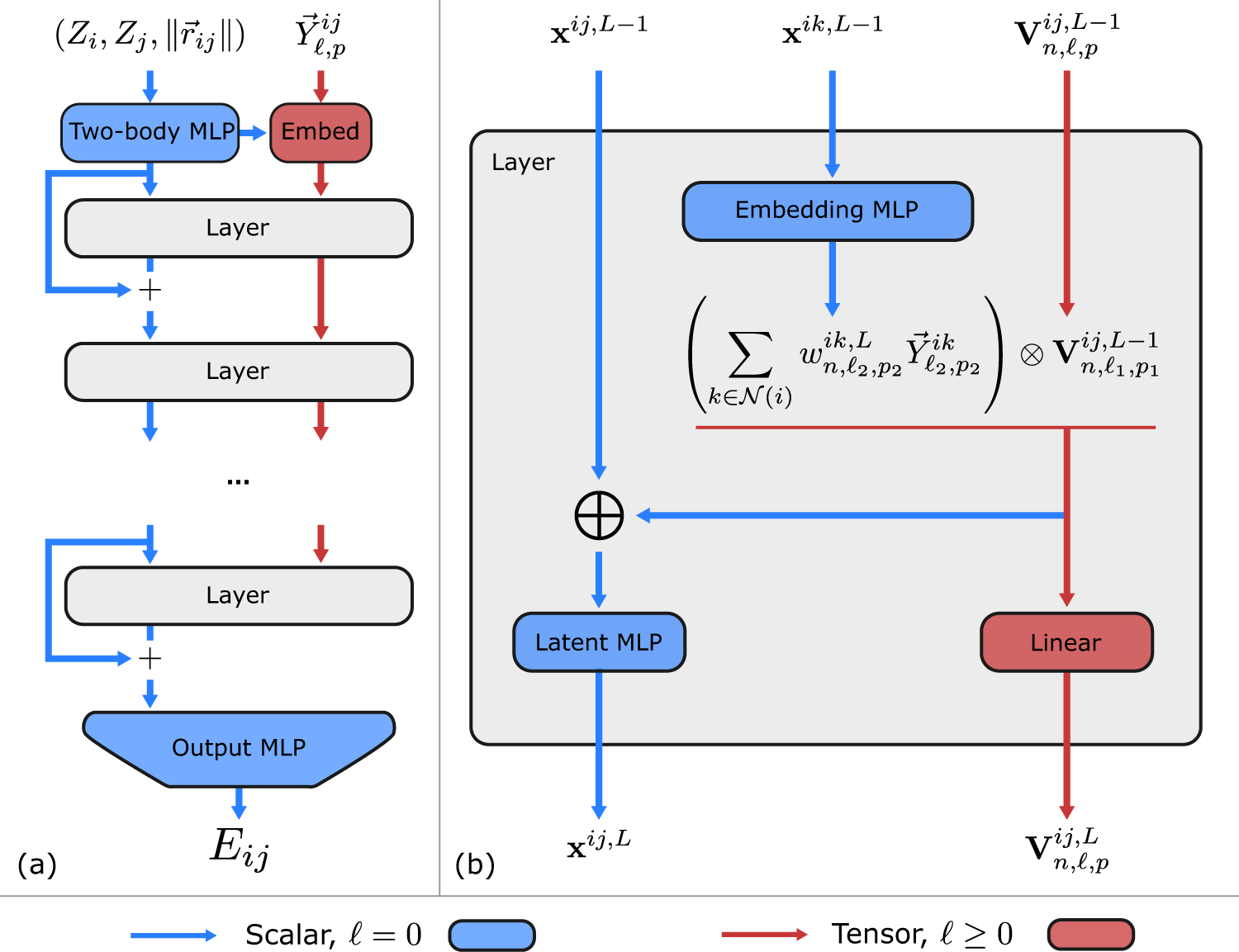

Learning local equivariant representations for large-scale

Grigory Sapunov on LinkedIn: Deep Learning with JAX

Intro to JAX for Machine Learning, by Khang Pham

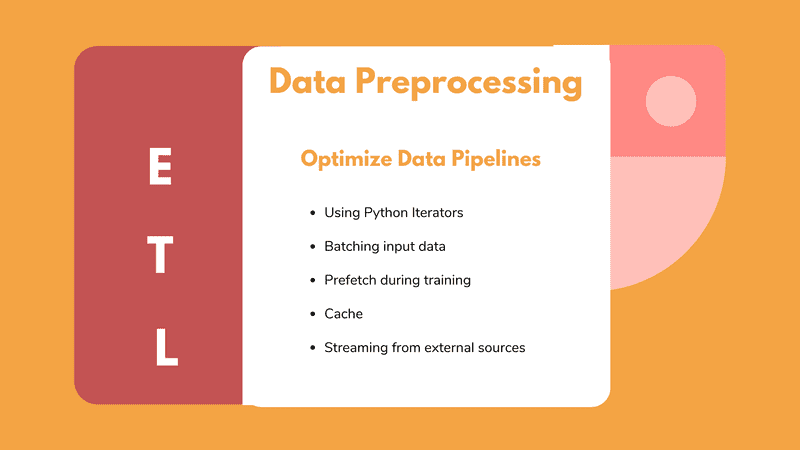

Data preprocessing for deep learning: Tips and tricks to optimize

Learn JAX in 2023: Part 2 - grad, jit, vmap, and pmap

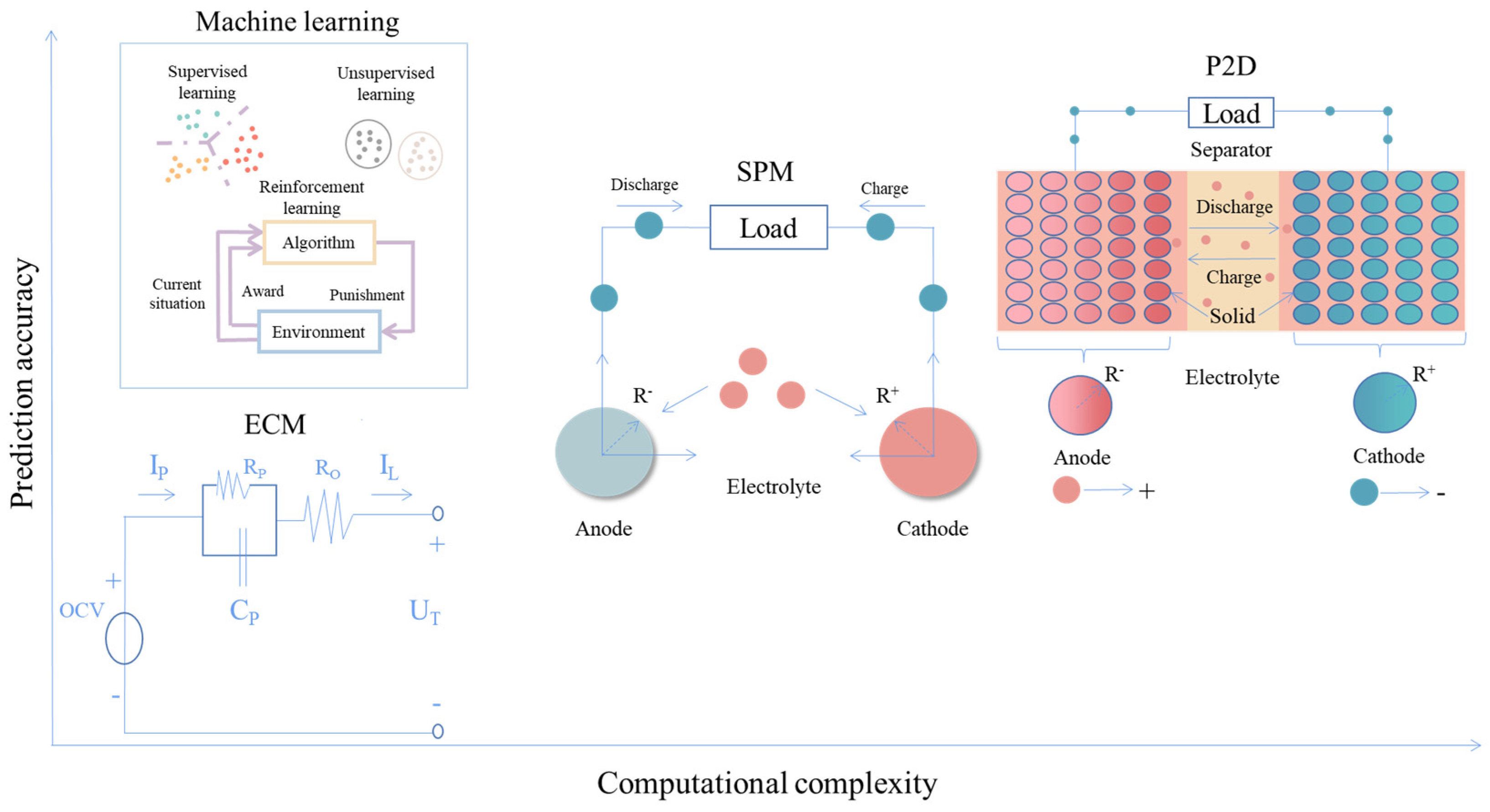

Energies, Free Full-Text

Model Parallelism

Dive into Deep Learning — Dive into Deep Learning 1.0.3 documentation

11.7. The Transformer Architecture — Dive into Deep Learning 1.0.3

Efficiently Scale LLM Training Across a Large GPU Cluster with

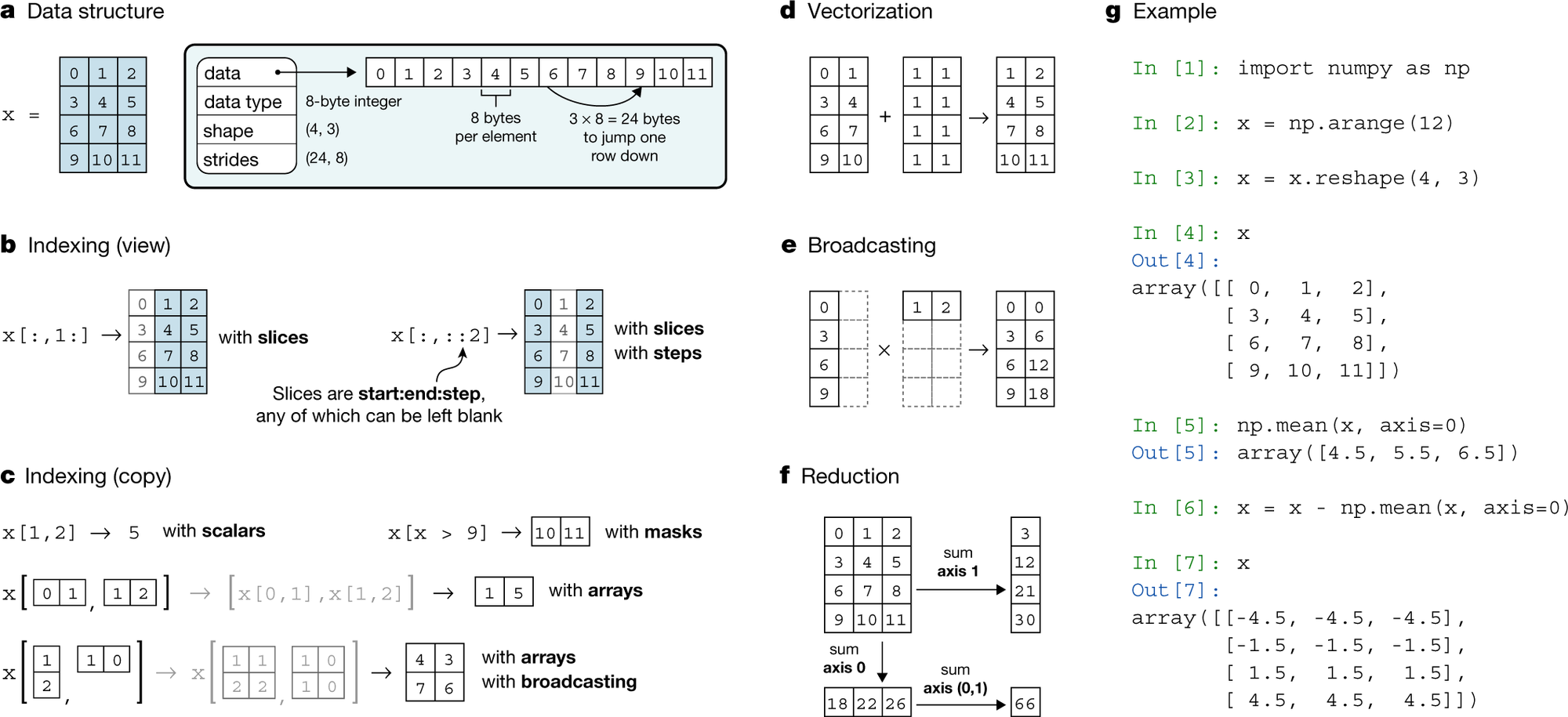

Breaking Up with NumPy: Why JAX is Your New Favorite Tool

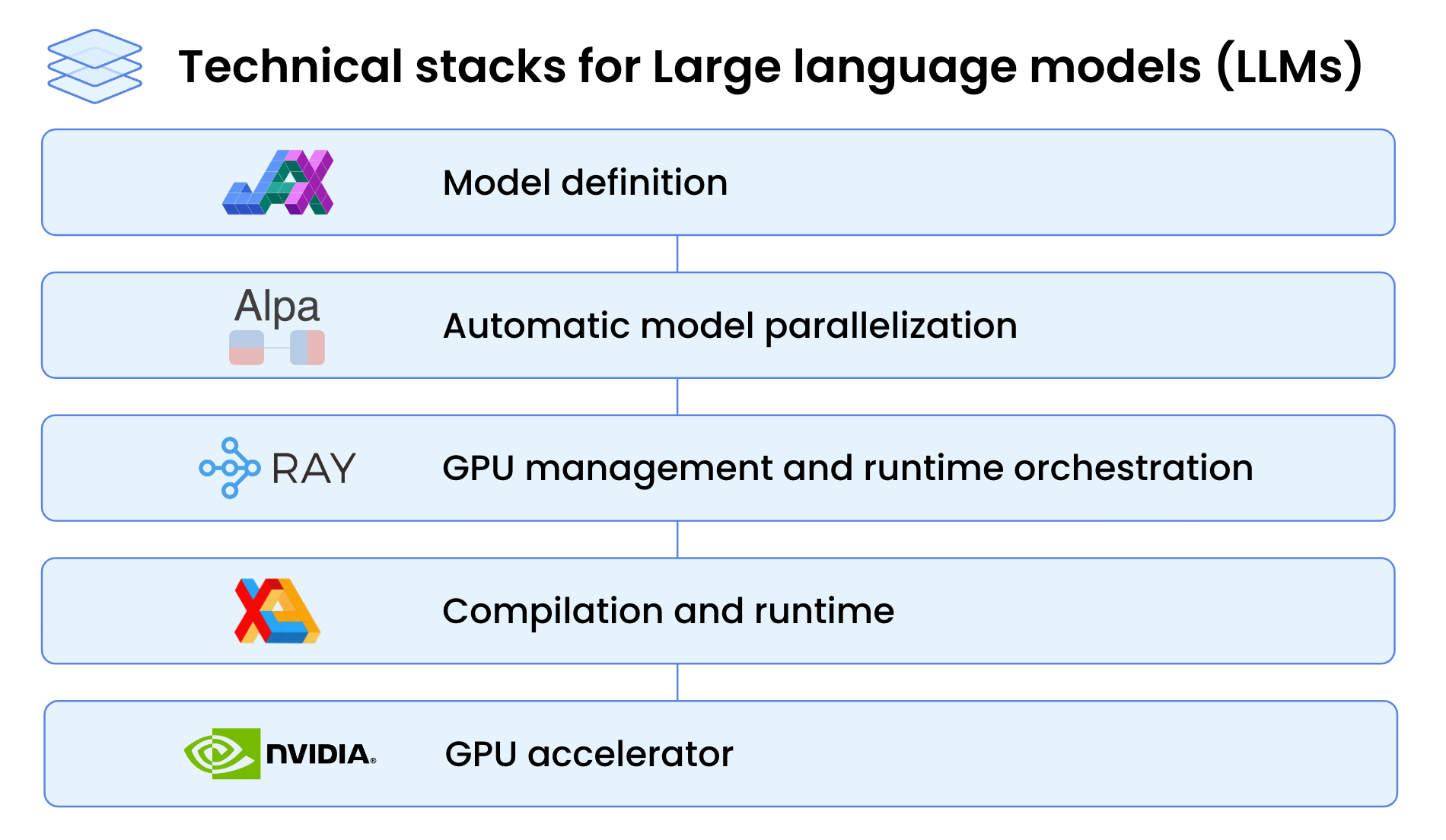

High-Performance LLM Training at 1000 GPU Scale With Alpa & Ray
